This echoes the broader attempts of demonstrating that (emotional) voice processing and the selective neural activity in the so-called temporal voice areas is not merely determined by particular spectro-temporal acoustic characteristics, often accomplished by a rigorous matching of vocal versus non-vocal low-level cues. A classical, but almost intrinsically paradoxical challenge in vocal emotional neuroscience, is the demonstration that emotion discrimination is not purely driven by low-level acoustic cues. For instance, sad speech is generally lower in pitch, and this is the case across different languages and cultures. Vocal emotion categories are indeed characterized by particular auditory features. Gating paradigms have indicated that different vocal emotions are recognized within a different time frame (e.g., fear recognition happens faster than happiness), thereby suggesting that the fast recognition relies on emotion specific low-level auditory features. The fast decoding of emotion prosody is not only found in humans but is also visible in a variety of other animals, which indicates that recognizing emotions from voices is an important evolutionary skill to communicate with conspecific animals. Explicit behavioral emotion recognition may take a bit longer, ranging from 266 to 1490 ms, depending on the paradigm and the particular emotion. An ERP study demonstrated a neural signature of implicit emotion decoding within 200 ms after the onset of an emotional sentence, suggesting that emotional voices can be differentiated from neutral voices within a 200 ms timeframe. Emotion recognition also happens extremely fast and based on limited auditory information. 
The recognition of vocally expressed emotions happens automatically : we cannot inhibit recognizing an emotion in a voice, for instance when talking to someone who recently got fired or, in contrast, who just got a promotion, we can identify the emotional state of this person as sad or happy within a few hundred milliseconds, even without any linguistic context. Eventually, here, we present a new database for vocal emotion research with short emotional utterances (EVID) together with an innovative frequency-tagging EEG paradigm for implicit vocal emotion discrimination. These findings demonstrate that emotion discrimination is fast, automatic, and is not merely driven by low-level perceptual features.

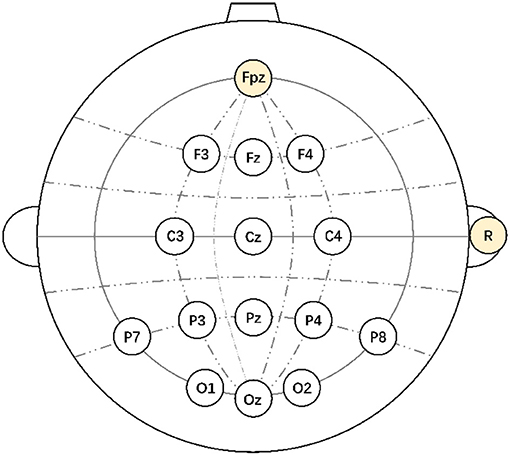

Results revealed significant oddball EEG responses for all conditions, indicating that every emotion category can be discriminated from the neutral stimuli, and every emotional oddball response was significantly higher than the response for the scrambled utterances. This scrambling preserves low-level acoustic characteristics but ensures that the emotional character is no longer recognizable. To control the impact of low-level acoustic cues, we maximized variability among the stimuli and included a control condition with scrambled utterances. Four emotions (happy, sad, angry, and fear) were presented as different conditions in different streams. We recorded frequency-tagged EEG responses of 20 neurotypical male adults while presenting streams of neutral utterances at a 4 Hz base rate, interleaved with emotional utterances every third stimulus, hence at a 1.333 Hz oddball frequency.

Psychopy oddball paradigm series#

We tested whether our brain can systematically and automatically differentiate and track a periodic stream of emotional utterances among a series of neutral vocal utterances. Voices convey a wealth of social information, such as gender, identity, and the emotional state of the speaker. Successfully engaging in social communication requires efficient processing of subtle socio-communicative cues.
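As a rough illustration of the frequency-tagging logic, the Python sketch below builds the stimulus labels, derives the oddball frequency, and quantifies a tagged response on a simulated signal. It uses the design parameters from the study: neutral utterances at a 4 Hz base rate and an emotional utterance every third stimulus. The signal-to-noise measure divides each frequency bin by the mean of its surrounding bins. This measure is a common choice in frequency-tagging analyses, but it is assumed here and not taken from the authors' pipeline. The helper names `build_sequence`, `snr_spectrum` and `simulate_eeg` are hypothetical, as are the sampling rate, duration, amplitudes and neighbor counts.

```python
import numpy as np

# Design parameters stated in the text.
BASE_RATE_HZ = 4.0                  # neutral utterances at a 4 Hz base rate
ODDBALL_EVERY_N = 3                 # an emotional utterance every third stimulus
ODDBALL_HZ = BASE_RATE_HZ / ODDBALL_EVERY_N  # 4 / 3, i.e. about 1.333 Hz


def build_sequence(n_stimuli, emotion="happy"):
    """Return stimulus labels: every third slot is the emotional oddball.

    Hypothetical helper; the labels stand in for actual utterance files.
    """
    return [emotion if (i + 1) % ODDBALL_EVERY_N == 0 else "neutral"
            for i in range(n_stimuli)]


def snr_spectrum(signal, fs, n_neighbors=10, n_skip=1):
    """Amplitude spectrum expressed as SNR (assumed analysis, not the authors').

    Each bin's amplitude is divided by the mean amplitude of `n_neighbors`
    bins on each side, skipping the `n_skip` bins directly adjacent to it.
    """
    n = len(signal)
    amp = np.abs(np.fft.rfft(signal)) / n
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    snr = np.full(amp.shape, np.nan)
    for k in range(len(amp)):
        below = range(k - n_skip - n_neighbors, k - n_skip)
        above = range(k + n_skip + 1, k + n_skip + 1 + n_neighbors)
        idx = [j for j in (*below, *above) if 0 <= j < len(amp)]
        if idx:
            snr[k] = amp[k] / amp[idx].mean()
    return freqs, snr


def simulate_eeg(fs=512, duration_s=60.0, seed=0):
    """Simulated single-channel EEG: base and oddball sinusoids plus noise.

    Hypothetical data for illustration only; amplitudes are arbitrary.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs * duration_s)) / fs
    base = 1.0 * np.sin(2 * np.pi * BASE_RATE_HZ * t)
    oddball = 0.5 * np.sin(2 * np.pi * ODDBALL_HZ * t)
    return base + oddball + rng.standard_normal(t.size)


if __name__ == "__main__":
    print(build_sequence(6))
    fs = 512
    freqs, snr = snr_spectrum(simulate_eeg(fs=fs), fs)
    for label, f in [("base", BASE_RATE_HZ), ("oddball", ODDBALL_HZ)]:
        k = int(np.argmin(np.abs(freqs - f)))
        print(f"{label}: {freqs[k]:.3f} Hz, SNR = {snr[k]:.1f}")
```

The 60 s window was chosen because it gives a frequency resolution of 1/60 Hz. At that resolution, 4/3 Hz (bin 80) and 4 Hz (bin 240) fall exactly on FFT bins, so the tagged responses do not leak into neighboring bins. In real recordings, the analysis window would similarly be chosen as a whole number of oddball cycles.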
